Let's get started!

SmallTrain demonstration using Docker on a Linux server

Training SmallTrain on a small dataset with Docker on a Linux server

As an example, this section shows how to install and set up SmallTrain on a DGX Station from a macOS machine. It then walks you through a training demo that uses CIFAR-10 data.

Adapt the settings as needed to your own environment, such as your own Linux server.

Example environment: Linux server (NVIDIA DGX Station running Ubuntu 18.04), local terminal (macOS)

- What is Docker?

- Docker is a software platform that makes it easy to set up and run applications. It packages and manages software in units called containers. Docker is also multi-platform and behaves the same way on Linux, Windows, and macOS machines. As a result, you can set up and run SmallTrain as a container on your own machine.

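- What is Docker Compose?

- Docker Compose is a tool for configuring and running multiple Docker containers. With Docker Compose, you can set up several Docker containers on the same host machine and let them communicate with each other.

(Docker Compose is sometimes written as docker-compose, which is the name of the Docker Compose command.)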


(This guide assumes that Docker is already installed.)

Check the docker-compose version to confirm that it is installed.

on the host

$ docker-compose -v

docker-compose version 1.22.0, build f46880fe

If no docker-compose version is displayed, install docker-compose.

on the host by host sudoers

$ sudo curl -L "https://github.com/docker/compose/releases/download/1.22.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

$ sudo chmod +x /usr/local/bin/docker-compose
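If you want a script to perform the version check above, the minimal Python sketch below extracts the version number from the `docker-compose -v` output and compares it with 1.22.0, the release installed in this guide. Treating 1.22.0 as a minimum is an assumption made for illustration, since SmallTrain does not state a required version here. Both function names are hypothetical.

```python
import re
from typing import Tuple


def parse_compose_version(output: str) -> Tuple[int, ...]:
    """Extract the version from `docker-compose -v` output.

    Example input: 'docker-compose version 1.22.0, build f46880fe'
    """
    match = re.search(r"version\s+v?(\d+(?:\.\d+)*)", output)
    if match is None:
        raise ValueError(f"unrecognized docker-compose output: {output!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def is_at_least(output: str, required: Tuple[int, ...] = (1, 22, 0)) -> bool:
    """Compare versions numerically; (1, 22, 0) is an assumed minimum."""
    version = parse_compose_version(output)
    # Pad the shorter tuple with zeros so that 1.22 compares equal to 1.22.0.
    width = max(len(version), len(required))
    padded_version = version + (0,) * (width - len(version))
    padded_required = required + (0,) * (width - len(required))
    return padded_version >= padded_required
```

On the host, you can pass it the output of `subprocess.run(["docker-compose", "-v"], capture_output=True, text=True).stdout`.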

Clone the SmallTrain repository.

on the host

$ mkdir -p ~/github/geek-guild/

$ cd ~/github/geek-guild/

$ git clone https://github.com/geek-guild/smalltrain.git

Clone the GGUtils repository.

on the host

$ mkdir -p ~/github/geek-guild/

$ cd ~/github/geek-guild/

$ git clone https://github.com/geek-guild/ggutils.git

If Docker is not running, start it. This requires sudoers privileges.

on the host by host sudoers

$ sudo service docker start

Create a Docker bridge network for SmallTrain.

Containers connected to the same Docker bridge network can communicate with each other.

- Bridge network name: smalltrain_network
- Subnet: 172.28.0.0/24
- Gateway: 172.28.0.1

on the host

$ docker network create -d bridge smalltrain_network --gateway=172.28.0.1 --subnet=172.28.0.0/24
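The subnet and gateway must be consistent, because the gateway has to be an address inside the subnet. The minimal sketch below uses Python's standard `ipaddress` module to check this for the values above and to count how many addresses remain for containers. The function name is hypothetical. The count assumes that only the gateway is reserved, which simplifies how Docker actually allocates addresses.

```python
import ipaddress


def check_bridge_network(subnet: str, gateway: str) -> int:
    """Validate a bridge network definition and return the number of
    host addresses left for containers after the gateway is assigned."""
    network = ipaddress.ip_network(subnet)  # raises ValueError if host bits are set
    gw = ipaddress.ip_address(gateway)
    if gw not in network:
        raise ValueError(f"gateway {gw} is outside {network}")
    # hosts() excludes the network and broadcast addresses.
    return sum(1 for _ in network.hosts()) - 1
```

For smalltrain_network, `check_bridge_network("172.28.0.0/24", "172.28.0.1")` succeeds. A typo such as a gateway of 172.29.0.1 raises a `ValueError` before Docker ever sees it.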

Run the Docker containers.

Before running the Docker script, check its environment configuration file.

The configuration file is named .env. It is in the docker directory of the SmallTrain repository you cloned earlier (~/github/geek-guild/smalltrain/docker/).

on the host

# Move to the docker directory

$ cd ~/github/geek-guild/smalltrain/docker/

# Open the configuration file .env

$ less .env

By default, .env contains the following:

COMPOSE_PROJECT_NAME=default

NVIDIA_VISIBLE_DEVICES=0

SMALLTRAIN_ROOT=~/github/geek-guild/smalltrain

GGUTILS_SRC_ROOT=~/github/geek-guild/ggutils

REDIS_ROOT=~/redis

SMALLTRAIN_IP_ADDR=172.28.0.2

REDIS_IP_ADDR=172.28.0.3

DATA_ROOT=~/data/

TENSORBOARD_PORT=6006

JUPYTER_NOTEBOOK_PORT=8888

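If other SmallTrain Docker containers are already running, change the settings (such as container names and IP addresses) so that the instances do not conflict with each other. For details on configuring the file, see "How to configure the Docker environment".

Once you have checked the configuration file, run the Docker script with the docker-compose up -d command. This sets up and starts the Docker containers.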
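If you run several SmallTrain instances, comparing their .env files is a quick way to spot clashes. The minimal Python sketch below parses simple KEY=VALUE lines and reports the keys whose values are identical in two configurations. The set of keys that must be unique (`UNIQUE_KEYS`) is an assumption inferred from the variable names shown above, not an official SmallTrain list. The parser also ignores quoting and variable expansion, which docker-compose's own .env handling may support. The function names are hypothetical.

```python
from typing import Dict, List

# Assumed set of settings that must differ between concurrently running
# SmallTrain instances (inferred from the .env names, not an official list).
UNIQUE_KEYS = (
    "COMPOSE_PROJECT_NAME",
    "SMALLTRAIN_IP_ADDR",
    "REDIS_IP_ADDR",
    "TENSORBOARD_PORT",
    "JUPYTER_NOTEBOOK_PORT",
)


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    env: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip()
    return env


def find_conflicts(first: Dict[str, str], second: Dict[str, str]) -> List[str]:
    """Return the UNIQUE_KEYS that have the same value in both configurations."""
    return [key for key in UNIQUE_KEYS
            if key in first and key in second and first[key] == second[key]]
```

Read each .env file as text, pass it to `parse_env`, and give the two resulting dicts to `find_conflicts`. An empty list means none of the assumed keys collide.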

on the host

# SmallTrain

$ cd ~/github/geek-guild/smalltrain/docker/

$ docker-compose up -d

Building smalltrain

Step 1/18 : FROM nvcr.io/nvidia/tensorflow:19.10-py3

...

Creating smalltrain ... done

Check that the SmallTrain Docker container you launched is running, and note its CONTAINER ID.

on the host

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

YYYYYYYYYYYY docker_smalltrain-redis "docker-entrypoint.s…" 15 minutes ago Up 15 minutes 0.0.0.0:6379->6379/tcp, 0.0.0.0:16379->16379/tcp smalltrain-redis

XXXXXXXXXXXX docker_smalltrain "/usr/local/bin/entr…" 15 minutes ago Up 15 minutes 0.0.0.0:6006->6006/tcp smalltrain
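Instead of copying the CONTAINER ID by hand, you can have Docker print only the ID and name of each container with `docker ps -a --format '{{.ID}} {{.Names}}'`, then pick the one named smalltrain. Docker commands such as `docker logs` and `docker exec` also accept the container name directly. The helper below is a hypothetical Python sketch of that lookup. It matches the name exactly, so smalltrain-redis is not picked by mistake.

```python
from typing import Optional


def container_id_by_name(ps_output: str, name: str = "smalltrain") -> Optional[str]:
    """Find a container ID in `docker ps -a --format '{{.ID}} {{.Names}}'` output.

    Each line is '<id> <names>'; <names> may be a comma-separated list.
    """
    for line in ps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        container_id, names = parts
        # Exact match on each listed name, not a substring test.
        if name in names.strip().split(","):
            return container_id
    return None
```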

Check the logs of the running SmallTrain Docker container.

on the host

$ CONTAINER_ID=XXXXXXXXXXXX

$ docker logs $CONTAINER_ID

...

Exec operation id: IR_2D_CNN_V2_l49-c64_TUTORIAL-DEBUG-WITH-SMALLDATASET-20200708-TRAIN

nohup: appending output to 'nohup.out'

Check GPU usage on the host.

on the host

$ watch -n 1 nvidia-smi

Every 1.0s: nvidia-smi gg-sta-20200116-volta: Tue Jan 21 10:42:27 2020

Tue Jan 21 10:42:27 2020

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 418.67 Driver Version: 418.67 CUDA Version: 10.1 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla V100-DGXS... On | 00000000:07:00.0 On | 0 |

| N/A 32C P0 37W / 300W | 316MiB / 32475MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 1 Tesla V100-DGXS... On | 00000000:08:00.0 Off | 0 |

| N/A 33C P0 35W / 300W | 1MiB / 32478MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 2 Tesla V100-DGXS... On | 00000000:0E:00.0 Off | 0 |

| N/A 34C P0 104W / 300W | 2893MiB / 32478MiB | 23% Default |

+-------------------------------+----------------------+----------------------+

| 3 Tesla V100-DGXS... On | 00000000:0F:00.0 Off | 0 |

| N/A 32C P0 36W / 300W | 1MiB / 32478MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 6903 G /usr/lib/xorg/Xorg 40MiB |

| 0 7058 G /usr/bin/gnome-shell 148MiB |

| 0 40041 G /usr/lib/xorg/Xorg 39MiB |

| 0 40082 G /usr/bin/gnome-shell 86MiB |

| 2 1441 C python 2879MiB |

+-----------------------------------------------------------------------------+

- Check that the GPU device specified by the environment variable (e.g. NVIDIA_VISIBLE_DEVICES=2) is in use.
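You can make the same check from a script. `nvidia-smi` prints only the fields you need when run with `--query-gpu=index,utilization.gpu,memory.used --format=csv,noheader,nounits`. The Python sketch below parses that output and reports whether every GPU listed in NVIDIA_VISIBLE_DEVICES is using a meaningful amount of memory. Keep these limitations in mind:

- The 1024 MiB threshold is an arbitrary heuristic. GPU 0 above already uses 316 MiB just for the desktop processes.
- Fields that nvidia-smi reports as `[N/A]` are not handled.
- The function names are hypothetical.

```python
from typing import Dict, Tuple


def parse_gpu_query(output: str) -> Dict[int, Tuple[int, int]]:
    """Parse `nvidia-smi --query-gpu=index,utilization.gpu,memory.used
    --format=csv,noheader,nounits` into {index: (utilization %, memory MiB)}."""
    gpus: Dict[int, Tuple[int, int]] = {}
    for line in output.strip().splitlines():
        index, util, mem = (field.strip() for field in line.split(","))
        gpus[int(index)] = (int(util), int(mem))
    return gpus


def visible_gpus_in_use(output: str, visible_devices: str,
                        min_memory_mib: int = 1024) -> bool:
    """True if each GPU in NVIDIA_VISIBLE_DEVICES (e.g. '2', '0,2' or 'all')
    uses at least min_memory_mib MiB, a rough sign that training runs on it."""
    gpus = parse_gpu_query(output)
    if visible_devices.strip() == "all":
        wanted = list(gpus)
    else:
        wanted = [int(d) for d in visible_devices.split(",") if d.strip()]
    return bool(wanted) and all(
        gpus.get(i, (0, 0))[1] >= min_memory_mib for i in wanted)
```

With the readings shown above (GPU 2 at 23% and 2893 MiB), `visible_gpus_in_use(output, "2")` returns True, while `"0"` returns False.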

Log in to the SmallTrain Docker container.

on the host

$ docker exec -it $CONTAINER_ID /bin/bash

Check the log of the run so far.

on the container

# Check the log.

$ less /var/smalltrain/logs/IR_2D_CNN_V2_l49-c64_TUTORIAL-DEBUG-WITH-SMALLDATASET-20200708-TRAIN.log

2020-01-20 14:34:45.125276: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library libcudart.so.10.1

...

========================================

step 99, test accuracy 0.905491

========================================

test cross entropy 0.336935

save model to save_file_path:/var/data/smalltrain/results/model/IR_2D_CNN_V2_l49-c64_TUTORIAL-DEBUG-WITH-SMALLDATASET-20200708-TRAIN/model-nn_lr-0.0001_bs-128.ckpt

new_learning_rate:0.0001

DONE train data

====================

When DONE train data is displayed, the training demo on the small dataset has finished. The first run takes a few minutes because the dataset has to be created.
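To check the log from a script, a minimal Python sketch can extract the `step N, test accuracy X` lines and look for the `DONE train data` marker. The regular expression is based only on the log excerpt above. Other SmallTrain log formats are not covered, and the function name is hypothetical.

```python
import re
from typing import List, Tuple

# Pattern taken from the excerpt: "step 99, test accuracy 0.905491"
STEP_ACCURACY = re.compile(r"step (\d+), test accuracy ([0-9]*\.?[0-9]+)")


def summarize_training_log(text: str) -> Tuple[List[Tuple[int, float]], bool]:
    """Return ([(step, test accuracy), ...], finished) for a SmallTrain log."""
    history = [(int(step), float(acc)) for step, acc in STEP_ACCURACY.findall(text)]
    finished = "DONE train data" in text
    return history, finished
```

Read the .log file under /var/smalltrain/logs/ as text and pass it in. The second value stays False until training has written the completion marker.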

SmallTrain is now up and running!

Checking the training and prediction results

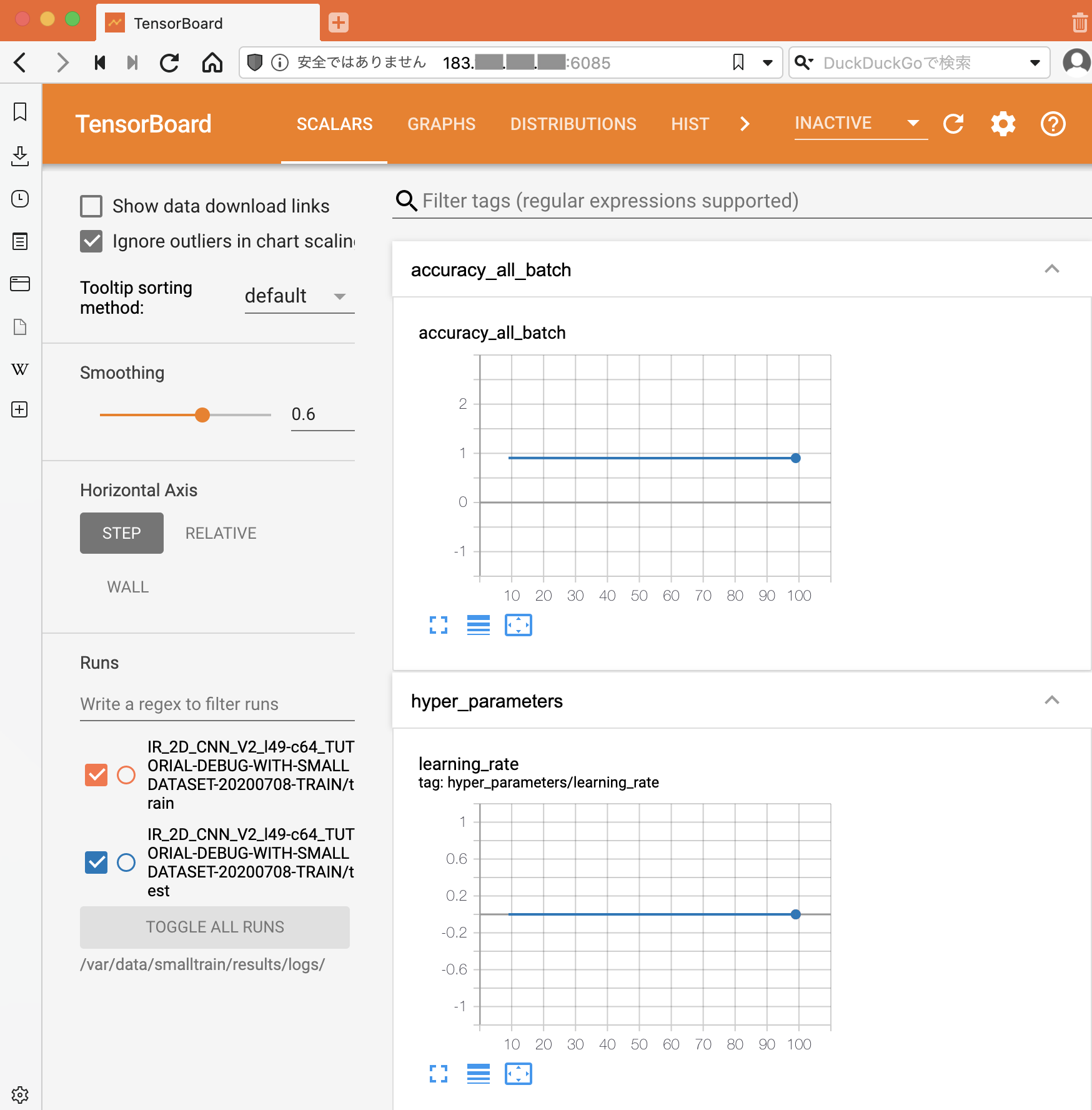

TensorBoardで確認してみましょう。

-

ブラウザから

http://<YOURHOST>:<TENSORBOARD_PORT>にアクセスし、TensorBoardの画面を確認します。(<TENSORBOARD_PORT>にはDocker環境設定で設定したTENSORBOARD_PORTの値を入力してください。) -

次のような画面が表示されます。

-

もし、TensorBoardにアクセスできない場合は、コンテナ上でTensorBoardが実行されているかどうかを確認し、実行します。

on the container

# Check whether TensorBoard is running

$ ps -ef | grep tensorboard

- If the output does not contain a line like the following, TensorBoard is not running.

root 361 1 2 04:50 pts/0 00:00:12 /usr/bin/python /usr/local/bin/tensorboard --logdir /var/data/smalltrain/results/logs/

- Start TensorBoard.

$ nohup tensorboard --logdir /var/data/smalltrain/results/logs/ &
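`ps -ef | grep tensorboard` always lists the grep command itself, so a match alone does not prove that TensorBoard is running. The hypothetical Python helper below scans `ps -ef` output, skips the grep line, and returns the `--logdir` of a TensorBoard process. It assumes the standard eight-column `ps -ef` layout shown above.

```python
import os
from typing import Optional


def find_tensorboard_logdir(ps_output: str) -> Optional[str]:
    """Return the --logdir of a TensorBoard process in `ps -ef` output.

    Returns None if no TensorBoard process is listed, or "" if one is
    running without an explicit --logdir. The `grep tensorboard` line is ignored.
    """
    for line in ps_output.splitlines():
        fields = line.split(None, 7)  # UID PID PPID C STIME TTY TIME CMD
        if len(fields) < 8:
            continue
        argv = fields[7].split()
        if os.path.basename(argv[0]) == "grep":
            continue  # the search command itself, not TensorBoard
        if not any(os.path.basename(arg) == "tensorboard" for arg in argv):
            continue
        for i, arg in enumerate(argv):
            if arg == "--logdir" and i + 1 < len(argv):
                return argv[i + 1]
            if arg.startswith("--logdir="):
                return arg.split("=", 1)[1]
        return ""
    return None
```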

Check the output files.

- SmallTrain writes its training and prediction results to the report directory as CSV files and other formats.

on the container

# Show the contents of the report directory.

$ ls -l /var/data/smalltrain/results/report/IR_2D_CNN_V2_l49-c64_TUTORIAL-DEBUG-WITH-SMALLDATASET-20200708-TRAIN/

total 540

-rw-r--r-- 1 root root 21416 Nov 2 08:32 all_variables_names.csv

-rw-r--r-- 1 root root 77687 Nov 2 08:32 prediction_e49_all.csv

-rw-r--r-- 1 root root 77687 Nov 2 08:32 prediction_e99_all.csv

-rw-r--r-- 1 root root 77687 Nov 2 08:32 prediction_e9_all.csv

-rw-r--r-- 1 root root 28 Nov 2 08:32 summary_layers_9.json

-rw-r--r-- 1 root root 109286 Nov 2 08:32 test_plot__.png

-rw-r--r-- 1 root root 54810 Nov 2 08:32 test_plot_e49_all.png

-rw-r--r-- 1 root root 54743 Nov 2 08:32 test_plot_e99_all.png

-rw-r--r-- 1 root root 54988 Nov 2 08:32 test_plot_e9_all.png

-rw-r--r-- 1 root root 5290 Nov 2 08:32 trainable_variables_names.csv

# Show the prediction results after 99 training steps.

$ less /var/data/smalltrain/results/report/IR_2D_CNN_V2_l49-c64_TUTORIAL-DEBUG-WITH-SMALLDATASET-20200708-TRAIN/prediction_e99_all.csv

FileName, Estimated, MaskedEstimated, True

/var/data/cifar-10-image/test_batch/test_batch_i9_c1.png_0,1,0.0,1

/var/data/cifar-10-image/test_batch/test_batch_i90_c0.png_0,0,0.0,0

/var/data/cifar-10-image/test_batch/test_batch_i91_c3.png_0,6,0.0,3

/var/data/cifar-10-image/test_batch/test_batch_i92_c8.png_0,8,0.0,8
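`ls` sorts names as text, so `prediction_e9_all.csv` is listed after `prediction_e99_all.csv`. If you script the choice of the most recent prediction file, compare the number after `e` numerically. The sketch below treats that number as the training step, as the comment above does for `prediction_e99_all.csv`. The helper name is hypothetical.

```python
import re
from typing import Iterable, Optional

# e.g. "prediction_e99_all.csv" -> 99
PREDICTION_FILE = re.compile(r"^prediction_e(\d+)_all\.csv$")


def latest_prediction_file(names: Iterable[str]) -> Optional[str]:
    """Return the prediction CSV with the highest step number, or None."""
    best_step, best_name = -1, None
    for name in names:
        match = PREDICTION_FILE.match(name)
        if match and int(match.group(1)) > best_step:
            best_step, best_name = int(match.group(1)), name
    return best_name
```

Pass it the result of `os.listdir()` on the report directory.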

- Read these results as follows.

| DateTime(FileName) | Estimated | MaskedEstimated | True |
|---|---|---|---|
| /var/data/cifar-10-image/test_batch/test_batch_i9_c1.png_0 | 1 | 0.0 | 1 |
| /var/data/cifar-10-image/test_batch/test_batch_i90_c0.png_0 | 0 | 0.0 | 0 |
| /var/data/cifar-10-image/test_batch/test_batch_i91_c3.png_0 | 6 | 0.0 | 3 |
| /var/data/cifar-10-image/test_batch/test_batch_i92_c8.png_0 | 8 | 0.0 | 8 |

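Each row has four columns: "DateTime(FileName)", "Estimated", "MaskedEstimated", and "True". In this demo, the first column holds the file name of the test image. "Estimated" is the model's output (the predicted class), and "True" is the true label. You read the results as follows.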

This result is incorrect:

```/var/data/cifar-10-image/test_batch/test_batch_i91_c3.png``` is predicted as ```6```, but the true label is ```3``` (incorrect)

This result is correct:

```/var/data/cifar-10-image/test_batch/test_batch_i92_c8.png``` is predicted as ```8```, and the true label is also ```8``` (correct)
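To get an overall figure instead of reading individual rows, a minimal Python sketch can count the rows where Estimated equals True. It relies only on the header shown above (`FileName, Estimated, MaskedEstimated, True`). That header has a space after each comma, which is why the reader uses `skipinitialspace=True`. The function name is hypothetical, and MaskedEstimated is ignored.

```python
import csv
import io
from typing import List, Tuple


def prediction_accuracy(csv_text: str) -> Tuple[float, List[str]]:
    """Return (accuracy, misclassified file names) for a prediction_e*_all.csv."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    total = 0
    wrong: List[str] = []
    for row in reader:
        total += 1
        # Compare as numbers so that "3" and "3.0" count as the same label.
        if float(row["Estimated"]) != float(row["True"]):
            wrong.append(row["FileName"])
    if total == 0:
        raise ValueError("no prediction rows found")
    return (total - len(wrong)) / total, wrong
```

On the four rows shown above, it returns 0.75, with test_batch_i91_c3.png_0 as the only miss. Run it on the whole file to get the accuracy over every row in that file.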